Deploying NSX Advanced Load Balancer 20.1

- Ramy Afifi

- Jan 30, 2022

Updated: Feb 23, 2023

Today, applications are no longer just supporting the business; they are the business. The need for applications to be available across any environment, securely and reliably, has outpaced the infrastructure that delivers them. That's why companies need to move faster and undergo a digital transformation to leverage the cloud and adopt modern application architectures.

Enterprises have already begun to automate compute and storage to increase speed and scalability while reducing complexity and cost. But load balancers, the critical component to ensure application availability and security, undermine any automation efforts. With traditional load balancing, enterprises find it very difficult to achieve agility in application deployments. Not only is the traditional load balancing approach difficult to automate, scale, and troubleshoot, the complexity of management, upgrades, and migrations can further hamper any digital transformation initiatives.

VMware NSX Advanced Load Balancer (formerly known as Avi Vantage) brings a software-defined, scalable, and distributed architecture that matches the new generation of applications and delivers the flexibility and simplicity expected by IT and lines of business. Let's take a closer look at the platform architecture and components.

Architecture Components

The platform architecture consists of three core components: Avi Controller, Avi Service Engines, and Avi Admin Console.

The Avi Controller is the “brain” of the entire system and acts as a single point of intelligence, management, and control for the data plane.

The Avi Service Engines handle all data plane operations by receiving and executing instructions from the Controller. The Service Engines perform load balancing functions that manage and secure application traffic, and collect real-time telemetry from the traffic flows. The Avi Controller processes this telemetry and presents actionable insights to administrators on a modern web-based user interface (Avi Admin Console) that provides role-based access and analytics in a dashboard.

The architecture separates the control plane from the data plane, bringing application services closer to the applications. The centralized policy-based approach brings a consistent experience across on-premises and cloud environments. The REST API enables automation, developer self-service, and a variety of third-party integrations. And with predictive auto-scaling, the platform can elastically scale on demand to meet unpredictable spikes in traffic, expand the business regionally, or provide backup during unexpected system failures.

In this post, we will learn how to set up VMware NSX Advanced Load Balancer in a vSphere environment. The information includes step-by-step configuration instructions and suggested best practices. So, let's take a closer look at the lab topology!

Lab Topology

In a typical load balancing scenario, a client will communicate with a virtual service.

A virtual service can be thought of as an IP address that Avi Vantage is listening on, ready to receive requests. In a normal TCP/HTTP configuration, when a client connects to the virtual service IP address, Avi Vantage will process the client connection or request against a list of settings, policies, and profiles, then send valid client traffic to a back-end server that is listed as a member of the virtual service's pool.

Typically, the connection between the client and Avi Vantage is terminated or proxied at the Service Engine, which opens a new TCP connection between itself and the server. The server will respond back directly to the Avi Vantage IP address, not to the original client address. Avi Vantage forwards the response to the client via the TCP connection between itself and the client.
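To make the proxy behavior concrete, here is a minimal sketch of the two separate connections involved. The client address (192.0.2.10) and the Service Engine address (10.1.1.50) are made-up values for illustration; only the virtual service and server addresses come from this lab.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Connection:
    src: str  # address that opens the TCP connection
    dst: str  # address that accepts it


def proxy(client_ip: str, vip: str, se_ip: str, server_ip: str) -> Tuple[Connection, Connection]:
    """Model a full proxy: one connection ends at the VIP, a second starts at the SE."""
    client_side = Connection(src=client_ip, dst=vip)
    server_side = Connection(src=se_ip, dst=server_ip)
    return client_side, server_side


# Hypothetical client and Service Engine addresses, lab VIP and server.
client_side, server_side = proxy("192.0.2.10", "10.1.1.179", "10.1.1.50", "10.1.1.189")
print(client_side)
print(server_side)
```

Notice that the server-side connection never carries the client address as its source, which is why the server replies to Avi Vantage rather than to the client.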

In this scenario, we are going to set up a virtual service (VS IP 10.1.1.179), listening on port 80 so that end users can connect over HTTP to access a simple web page that’s running on two back-end web servers (10.1.1.189, 10.1.1.200). Avi Vantage is going to distribute traffic across the two web servers, so client traffic coming in goes through the virtual service and is sent to one of the back-end web servers. So, let's get started!

Preparing for Installation

The following resources are designed to help you plan your NSX Advanced Load Balancer deployment, and effectively manage your virtualization environment.

Downloading the installation file

Download the NSX Advanced Load Balancer OVA file from the VMware Downloads Web Site. VMware NSX Advanced Load Balancer is listed under Networking & Security.

Installing the OVA

Avi Vantage can run with a single Avi Controller (single-node deployment) or with a 3-node Avi Controller cluster for redundancy and high availability. Creating a 3-node cluster is highly recommended for production environments. For a proof-of-concept environment, you can deploy a single Avi Controller.

You can set up the Avi Controller virtual appliance by importing OVA to your vCenter server. Right-click the target host on which you want to deploy the appliance and select Deploy OVF Template to start the installation wizard.

Enter the OVA download URL or navigate to the OVA file.

Enter a name for the Avi Controller virtual machine. The name you enter appears in the vSphere inventory.

Select a compute resource on which to deploy the Avi Controller virtual appliance.

Verify the OVF template details.

Select a datastore to store the Avi Vantage appliance files.

Select a destination network for the source network. Select the port group or destination network for the Avi Controller virtual appliance.

On the Customize template page, complete the deployment details. Enter the Avi Controller management interface IP address, default gateway, and subnet mask.

Verify that all of your custom OVF template specifications are accurate and click Finish to initiate the installation. The installation might take 7-8 minutes.
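A typo in the management IP address, subnet mask, or default gateway is easy to miss in the wizard. As a rough pre-check, the sketch below uses Python's standard `ipaddress` module to confirm the three values are consistent. The addresses are hypothetical examples, not values taken from this lab.

```python
import ipaddress


def check_mgmt_settings(ip, mask, gateway):
    """Return a list of problems found in the management interface settings."""
    iface = ipaddress.ip_interface(f"{ip}/{mask}")
    gw = ipaddress.ip_address(gateway)
    network = iface.network
    problems = []
    if gw not in network:
        problems.append(f"gateway {gw} is outside {network}")
    if gw == iface.ip:
        problems.append("gateway and management IP are identical")
    if iface.ip in (network.network_address, network.broadcast_address):
        problems.append(f"{iface.ip} is the network or broadcast address")
    return problems


# Hypothetical values: a consistent set, then a gateway in the wrong subnet.
print(check_mgmt_settings("10.1.1.10", "255.255.255.0", "10.1.1.1"))
print(check_mgmt_settings("10.1.1.10", "255.255.255.0", "10.1.2.1"))
```

An empty list means the three values agree with each other; it says nothing about whether the address is actually free on the network.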

Once the Avi Controller virtual appliance is installed, start the virtual machine. The next step is to log in to the virtual appliance web console (Avi Admin Console) in order to complete the setup wizard.

Logging In to the Newly Created Avi Controller

After you install the Avi Controller virtual appliance, you can use the user interface to complete the setup wizard and perform other installation tasks. From a web browser, navigate to the Avi Admin Console at https://<Avi-Controller-IP>/#!/admin-user-setup/.

Configure the basic system settings:

Administrator account.

DNS and NTP Server information.

Email or SMTP information.

Backup passphrase.

Set the infrastructure type to VMware.

During the initial Controller setup, a vCenter account must be entered to allow communication between the Controller and the vCenter. The vCenter account must have the privileges to create new folders in the vCenter. This is required for Service Engine creation, which then allows virtual service placement.

Enter the vCenter credentials and IP address.

Set the Permissions to "Write". Avi Vantage can be deployed with a VMware cloud in either no access, read access, or write access mode. Each mode is associated with different functionality and automation, and also requires different levels of privileges for Avi Controller within VMware vCenter.

Write access is the recommended deployment mode. It is the quickest and easiest way to deploy and offers the highest level of automation between Avi Vantage and VMware vCenter. This mode requires a vCenter user account with write privileges. The Avi Controller automatically spins up Avi Service Engines as needed, and accesses vCenter to discover information about the networks and VMs.

Select the Data Center and choose the Default Network IP Address Management. This is used to assign IP addresses to the data interfaces of Service Engines.

Each Avi Service Engine deployed in a VMware cloud has 10 vNICs. The first vNIC is the management vNIC, which the Avi Service Engine uses to communicate with the Avi Controller. The other vNICs are data vNICs and are used for end-user traffic.

After spinning up an Avi Service Engine, the Avi Controller connects the Avi Service Engine’s management vNIC to the management network specified during initial configuration. The Avi Controller then connects the data vNICs to virtual service networks according to the IP and pool configuration of the virtual services.

The Avi Controller builds a table that maps port groups to IP subnets. With this table, the Avi Controller connects Avi Service Engine data vNICs to port groups that match virtual service networks and pools.
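The lookup described above can be pictured as a simple table search. The sketch below is only an illustration of the idea, not the Controller's implementation, and the port group names and the management subnet are invented for the example.

```python
import ipaddress

# Hypothetical port-group-to-subnet table of the kind the Controller discovers from vCenter.
PORT_GROUP_SUBNETS = {
    "pg-mgmt": ipaddress.ip_network("10.1.0.0/24"),
    "pg-web": ipaddress.ip_network("10.1.1.0/24"),
}


def find_port_group(ip, table):
    """Return the name of the port group whose subnet contains the address, or None."""
    addr = ipaddress.ip_address(ip)
    for name, subnet in table.items():
        if addr in subnet:
            return name
    return None


# The lab's virtual service IP and pool members all resolve to the same port group.
for ip in ("10.1.1.179", "10.1.1.189", "10.1.1.200"):
    print(ip, "->", find_port_group(ip, PORT_GROUP_SUBNETS))
```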

After a data vNIC is connected to a port group, it needs to be assigned an IP address. Avi Service Engine requires an IP address in each of the virtual service networks and server networks. This process is automatic in write access and read access deployment modes.

Select the Management Network for Service Engine management and choose the Management Network IP Address Management settings. This is used to assign IP addresses to the management interfaces of Service Engines.

For IP allocation method, enter a subnet address and a range of host addresses within the subnet, in the case of static address assignment. Avi Vantage will assign addresses from this range to the Avi Service Engine data interfaces.
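For static assignment, it helps to confirm that the range really sits inside the subnet and to see how many Service Engine interfaces it can cover. Here is a small sketch using the standard `ipaddress` module; the subnet and range are hypothetical values chosen for illustration.

```python
import ipaddress


def static_pool(subnet, first, last):
    """Return every address from first to last, checking both lie inside the subnet."""
    net = ipaddress.ip_network(subnet)
    start = ipaddress.ip_address(first)
    end = ipaddress.ip_address(last)
    if start not in net or end not in net:
        raise ValueError(f"range {first}-{last} is not inside {net}")
    if start > end:
        raise ValueError("range start is after range end")
    return [ipaddress.ip_address(value) for value in range(int(start), int(end) + 1)]


# Hypothetical static range inside a /24.
pool = static_pool("10.1.1.0/24", "10.1.1.210", "10.1.1.219")
print(len(pool), "addresses, from", pool[0], "to", pool[-1])
```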

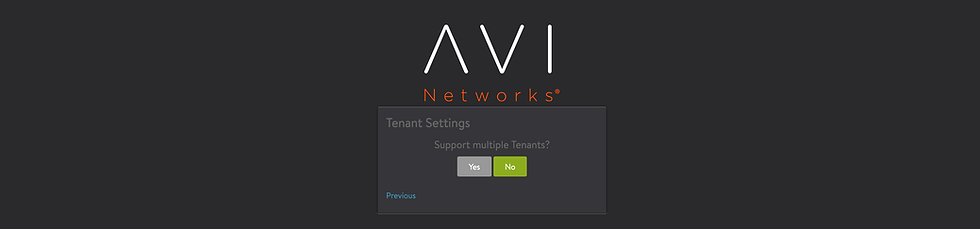

Avi Vantage provides isolation via tenants and roles.

A tenant is an isolated instance of Avi Vantage. Each Avi Vantage user account is associated with one or more tenants. The tenant associated with a user account defines the resources that user can access within Avi Vantage. When a user logs in, Avi Vantage restricts their access to only those resources that are in the same tenant.

If a user is associated with multiple tenants, each tenant still isolates the resources that belong to that tenant from the resources in other tenants. To access resources in another tenant, the user must switch the focus of the management session to that other tenant.

By default, all resources belong to a single, global tenant: admin. The admin tenant contains all Avi Vantage resources. The default admin user account belongs to the admin tenant and therefore can access all resources. If no additional tenants are created, all new Avi Vantage user accounts are automatically added to the admin tenant.
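As a toy model of the isolation rules above, the sketch below shows a user who belongs to two tenants but only sees the resources of the tenant currently in focus. The tenant, user, and resource names are invented for illustration and do not reflect how Avi Vantage stores this data.

```python
# Hypothetical tenants and the resources each one owns.
TENANT_RESOURCES = {
    "tenant-a": {"shop-vs", "shop-pool"},
    "tenant-b": {"blog-vs"},
}

# Hypothetical user-to-tenant associations.
USER_TENANTS = {
    "alice": {"tenant-a", "tenant-b"},
    "bob": {"tenant-b"},
}


def visible_resources(user, active_tenant):
    """Return the resources a user sees while the session is focused on one tenant."""
    if active_tenant not in USER_TENANTS.get(user, set()):
        raise PermissionError(f"{user} is not associated with {active_tenant}")
    return TENANT_RESOURCES.get(active_tenant, set())


print(sorted(visible_resources("alice", "tenant-a")))
print(sorted(visible_resources("alice", "tenant-b")))  # after switching tenant focus
```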

The setup is completed! You will be directed to the Avi Admin Console web UI.

The Avi Controller is now ready for virtual service configuration!

As stated before, a virtual service is a core component of the Avi Vantage load balancing and proxy functionality. Think of a virtual service as an IP address that Avi is listening to. It advertises an IP address and ports to the external world and listens for client traffic.

When a virtual service receives traffic, it may be configured to:

Proxy the client's network connection.

Perform security, acceleration, load balancing, traffic statistics gathering, and other tasks.

Forward the client's request data to the destination pool for load balancing.

A typical virtual service consists of a single IP address and service port that uses a single network protocol. Avi Vantage allows a virtual service to listen to multiple service ports or network protocols. For instance, a virtual service could be created for both service port 80 (HTTP) and 443 (HTTPS). In this example, clients can connect to the site with a non-secure connection and later be redirected to the encrypted version of the site.

It is possible to create two unique virtual services, where one is listening on port 80 and the other is on port 443; however, they will have separate statistics, logs, and reporting. They will still be owned by the same Service Engines because they share the same underlying virtual service IP address.

In this scenario, we are going to set up a single virtual service (VS IP 10.1.1.179), listening for client traffic on port 80, with two back-end servers (10.1.1.189, 10.1.1.200) ready to receive incoming requests.

Creating a Virtual Service

A new virtual service may be created via either the basic or advanced mode. In basic mode, many features are not exposed during the initial setup. After the virtual service has been created via basic mode, the options shown while editing are the same as in advanced mode, regardless of which mode was initially used. Using basic mode to create the virtual service does not preclude access to any advanced features.

Basic Mode — This mode is strongly recommended for the vast majority of normal use cases. It requires minimal user input and relies on pre-defined configurations for the virtual service that should be applicable for most applications. Creating a new virtual service in basic mode can be accomplished within a single popup window.

Advanced Mode — This mode requires additional user input and is recommended when requiring access to less common features, such as policy rules or customized analytics settings. This mode may also be used to configure a virtual service for multiple ports or network protocols.

Let's create our first virtual service using the Basic Mode. On the Dashboard, select Create Virtual Service and select Basic Setup.

Add an HTTP Virtual Service with the parameters below, then click Create:

Virtual Service Name: demo-vs

Virtual IP Address: 10.1.1.179

Application Type: HTTP

Service Port: 80

Server members: add both 10.1.1.189, 10.1.1.200
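If you prefer to keep the lab parameters in a file rather than retyping them, they can be captured as a small data structure. The sketch below is just a record of the values entered in the form; the class and field names are my own and are not the Avi REST API schema.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class VirtualServiceSpec:
    # Field names are illustrative only, not the names used by the Avi API.
    name: str
    vip: str
    application_type: str
    port: int
    servers: List[str] = field(default_factory=list)


spec = VirtualServiceSpec(
    name="demo-vs",
    vip="10.1.1.179",
    application_type="HTTP",
    port=80,
    servers=["10.1.1.189", "10.1.1.200"],
)

# Serialize the record so it can be stored alongside other lab notes.
print(json.dumps(asdict(spec), indent=2))
```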

It may take several minutes to create the components below:

A virtual service named demo-vs

A virtual IP 10.1.1.179

A destination pool with two members (10.1.1.189, 10.1.1.200)

Data plane service engines

Checking the Virtual Service

Verify that the Virtual Service named "demo-vs" has been created by navigating to Applications > Virtual Services.

Checking the Virtual IP

Verify that the Virtual IP has been created by navigating to Applications > VS VIPs.

Virtual Services and Virtual IPs are related but separate things. So, it’s important to highlight the difference between them.

A Virtual IP is a single IP address owned and advertised by a Service Engine. A given IP address [Virtual IP] can be advertised from only a single SE.

On the other hand, a Virtual Service is a Virtual IP plus a specific layer 4 protocol port [or ports] that are used to proxy an application. A single Virtual IP can have multiple virtual services. For example, all the following virtual services can exist on a single Virtual IP:

192.168.1.1:80,443 [HTTP/S]

192.168.1.1:20,21 [FTP]

192.168.1.1:53 [DNS]

The Virtual IP in this example is 192.168.1.1. The services are HTTP/S, FTP, and DNS. Thus, the virtual service for HTTP/S is advertised at 192.168.1.1:80,443, which is the Virtual IP plus protocol ports 80 and 443.
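The relationship can also be shown by splitting each listener into its address and ports and grouping by address. This is only a sketch of the concept, using the `address:port,port` notation from the example above.

```python
def parse_listener(text):
    """Split 'address:port,port' into the Virtual IP and a list of port numbers."""
    address, _, ports = text.partition(":")
    return address, [int(port) for port in ports.split(",")]


listeners = ["192.168.1.1:80,443", "192.168.1.1:20,21", "192.168.1.1:53"]

# Group the virtual services (port sets) under the Virtual IP they share.
services_by_vip = {}
for listener in listeners:
    vip, ports = parse_listener(listener)
    services_by_vip.setdefault(vip, []).append(ports)

print(services_by_vip)
```

One Virtual IP ends up as the single key, with three virtual services listed under it.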

Checking the Destination Pool

Verify that the destination pool has been created by navigating to Applications > Pools.

A destination pool is a list of servers that are serving a virtual service and ready to accept traffic. Pools maintain the list of servers assigned to them and perform health monitoring, load balancing, persistence, and functions that involve Avi-to-server interaction.
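To picture how a pool spreads requests over its members, here is a minimal round-robin sketch using the two lab servers. Round-robin is chosen purely for illustration; this post does not state which load balancing algorithm the pool actually applies.

```python
from collections import Counter
from itertools import cycle

POOL_MEMBERS = ["10.1.1.189", "10.1.1.200"]


def distribute(num_requests, servers):
    """Hand out requests to servers in strict rotation and count them per server."""
    rotation = cycle(servers)
    return Counter(next(rotation) for _ in range(num_requests))


counts = distribute(10, POOL_MEMBERS)
print(counts)
```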

A typical virtual service will point to one pool; however, more advanced configurations may have a virtual service content switching across multiple pools via HTTP request policies or DataScripts. Also, a pool may only be used or referenced by one virtual service at a time.

Checking the Service Engine

Verify that the Service Engines have been created by navigating to Infrastructure > Service Engines.

The newly created Service Engines appear in the vCenter inventory as shown below.

Service Engines are virtual machines that provide the data path functionality and service the workloads that require load balancing.

Service Engines are typically created within a Service Engine group, which contains the definition of how the Service Engines should be sized, placed, and made highly available. Each Cloud will have at least one Service Engine group. The options within Service Engine group may vary based on the type of cloud within which they exist and its settings, such as no access versus write access mode.

The Virtual Service Tree View

The tree view expands to show the pool name and the servers within the pool, along with the Service Engines that are currently serving application traffic. Additionally, the Avi health score is displayed for the virtual service, pool, and pool members. In the context of the Avi Vantage platform, the application health score is a computed representation of how well the application is working based on its performance, resource utilization, security, and any anomalous behavior. The health score is expressed as a numerical score from 1 to 100.

Let's hover over or click the "health number" of the virtual service. Notice there are 4 components that make up health score:

Performance – indicates the overall performance of the application.

Resource Penalty – measures the amount of resource strain on the Service Engine; a high value may indicate a need to scale out.

Anomaly Penalty – measures anomalies in the application, looking for behavior that falls outside its normal pattern.

Security Penalty – considers things like self-signed certificates, approaching certificate expiry dates, volumetric attacks (DDoS), or misconfigurations in the security posture.
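One way to read these four components is that the performance score is the starting point and each penalty is subtracted from it. The sketch below works through that arithmetic under that assumption; the exact formula and the sample values are not taken from this lab.

```python
def health_score(performance, resource_penalty, anomaly_penalty, security_penalty):
    """Assumed model: start from the performance score and subtract each penalty."""
    return performance - resource_penalty - anomaly_penalty - security_penalty


# Made-up values: a well-performing app with mild SE strain and a self-signed certificate.
score = health_score(performance=100, resource_penalty=5, anomaly_penalty=0, security_penalty=20)
print(score)
```

Read this way, a low score with a high performance component points at the penalties (resources, anomalies, or security) rather than at the application's raw speed.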

Sending traffic to the Virtual Service

Let's perform a quick test by sending HTTP traffic to the web servers over port 80. Open several web browsers on various devices (Laptops, iPads, or iPhones) and navigate to the virtual service IP address, allowing traffic to go through the elastic load balancer and be distributed to either one of the web servers.

It works! End users are able to connect over HTTP and access the web servers.
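The browser test can also be scripted. The sketch below requests the virtual service repeatedly and counts the distinct page bodies returned, assuming each web server serves a page that identifies it (that is an assumption about the lab pages). To keep the example runnable without the lab, the demo at the bottom uses a stand-in fetcher that alternates two made-up bodies.

```python
from collections import Counter
from itertools import cycle
import urllib.request

VS_URL = "http://10.1.1.179/"


def fetch_over_http(url, timeout=5.0):
    """Fetch a page body over HTTP (needs network access to the virtual service)."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace").strip()


def tally_pages(fetch, url, attempts):
    """Request the URL repeatedly and count how often each distinct body comes back."""
    return Counter(fetch(url) for _ in range(attempts))


# Offline stand-in so the sketch runs anywhere: alternates two made-up page bodies.
_fake_pages = cycle(["web-1", "web-2"])


def fake_fetch(url):
    return next(_fake_pages)


demo_counts = tally_pages(fake_fetch, VS_URL, 6)
print(demo_counts)
```

In the lab itself, the call would be `tally_pages(fetch_over_http, VS_URL, 20)`. Seeing more than one distinct body in the result suggests that both pool members are receiving traffic.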

Exploring the Virtual Service Metrics

There are different time frames of traffic that can be displayed. The screenshot below is "Displaying Past 6 Hours".

This view shows the complete picture of an application's performance from client to application. It shows average end-to-end timings and also breaks the values out into Client RTT and Server RTT. Parameters like App Response, Data Transfer, and Total Time are displayed in easy-to-read graphs. On the right side, additional metrics can be graphed for the chosen time period.

The Anomalies section of the analytics graph reflects the application's performance. If the application seems slower than normal, this is extremely helpful for pinpointing where to begin troubleshooting. It can make clear when a performance issue belongs with the app team and not the network or load balancer teams.
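To show how such a breakdown helps locate a bottleneck, the sketch below adds up the four timing segments and picks the largest one. It assumes Total Time is the sum of Client RTT, Server RTT, App Response, and Data Transfer, and the millisecond values are invented.

```python
def total_time_ms(client_rtt, server_rtt, app_response, data_transfer):
    """Assumed model: total time is the sum of the four timing segments."""
    return client_rtt + server_rtt + app_response + data_transfer


# Made-up averages in milliseconds.
timings = {"client_rtt": 12.0, "server_rtt": 1.5, "app_response": 48.0, "data_transfer": 6.5}

total = total_time_ms(**timings)
slowest = max(timings, key=timings.get)
print(f"total={total} ms, largest segment={slowest}")
```

With these sample numbers, most of the time is spent waiting on the application's response rather than on the network.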

Viewing the Virtual Service Logs

Virtual services and pools are able to log client-to-application interactions for TCP connections and HTTP requests/responses. These logs can be indexed, viewed, and filtered locally within the Avi Controller. Logs can be useful for troubleshooting and surfacing insights about the end-user experience and success of the applications.

Avi Vantage automatically logs common network and application errors under the umbrella of significant logs. These significant logs may also include entries for lesser issues, such as transactions that completed successfully but took an abnormally long time.
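The idea of a significant log can be sketched as a simple filter: keep entries that failed, plus entries that succeeded but were unusually slow. The record fields and the 500 ms threshold below are hypothetical; the criteria Avi Vantage actually applies are not described in this post.

```python
from dataclasses import dataclass


@dataclass
class LogEntry:
    uri: str
    status: int      # HTTP response status code
    total_ms: float  # end-to-end time for the transaction


SLOW_MS = 500.0  # hypothetical threshold for "abnormally long"


def is_significant(entry, slow_ms=SLOW_MS):
    """Flag errors, and successful transactions that took longer than the threshold."""
    return entry.status >= 400 or entry.total_ms > slow_ms


entries = [
    LogEntry("/", 200, 35.0),
    LogEntry("/missing", 404, 12.0),
    LogEntry("/report", 200, 1800.0),
]
significant = [entry.uri for entry in entries if is_significant(entry)]
print(significant)
```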

Let's take a closer look at one of the transactions we just generated.

The Log Analytics area displays a series of pre-built filters that summarize the client logs. This provides an in-depth view of the specific connection log or the HTTP request and response log.

This level of detail on traffic flow is nearly impossible to get from legacy load balancers. You would need to take TCP dumps and run them through Wireshark, and you still might not find the performance issue with the app.

Summary

VMware NSX Advanced Load Balancer brings a software-defined, scale-out, distributed architecture, enabling a next generation architecture to deliver flexibility, simplicity, and consistency expected by IT staff.
